April 3, 2024

Sacred/religious scripture is becoming an issue in an image manipulation context. I was originally interested in it for its foundational nature and its persistence as a value set, in contrast to the ephemera of the AI image. The problem is that using these blended religious texts as input for image generation produces pretty generic outputs. The model has learned strong associations for these particular tokens and correlates them with all-too-familiar religious imagery.

One solution is to extract lists of nouns or more descriptive language (a viewer describing a scene, etc.) and to move away from the ultra classics (lol) like the Bible and the Tao/Gita, since the model can too easily pick up on words like Jesus, Buddha, Krishna etc., which have such exacting visual identities and such large representation in the training data.
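A minimal sketch of the crudest version of this idea: drop the most visually loaded names before the text ever reaches the image prompt. The function name `drop_loaded_words` and the blocklist are hypothetical; only the three names come from the note above, and a real list would need to grow by trial and error.

```python
import string

# Hypothetical blocklist: names with very strong visual identities.
# Only these three come from the note above; extend as needed.
BLOCKLIST = {"jesus", "buddha", "krishna"}

def drop_loaded_words(text, blocklist=BLOCKLIST):
    """Remove blocklisted words (case-insensitive), keeping everything else in order."""
    kept = []
    for word in text.split():
        # Compare without surrounding punctuation so "Krishna," still matches.
        bare = word.strip(string.punctuation).lower()
        if bare not in blocklist:
            kept.append(word)
    return " ".join(kept)
```

The result is not grammatical, which may be fine for a prompt window. It only catches exact names, not the surrounding vocabulary that also pulls toward religious imagery.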

March 21, 2024

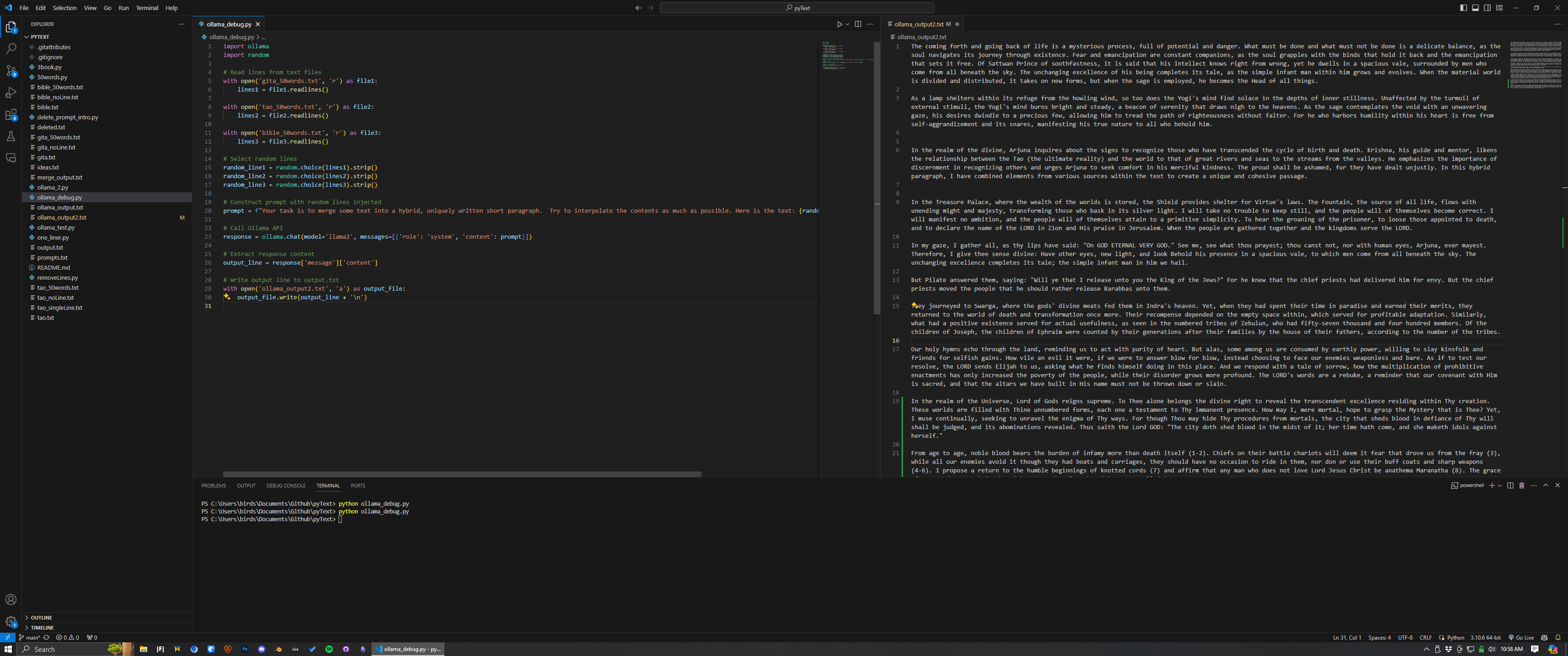

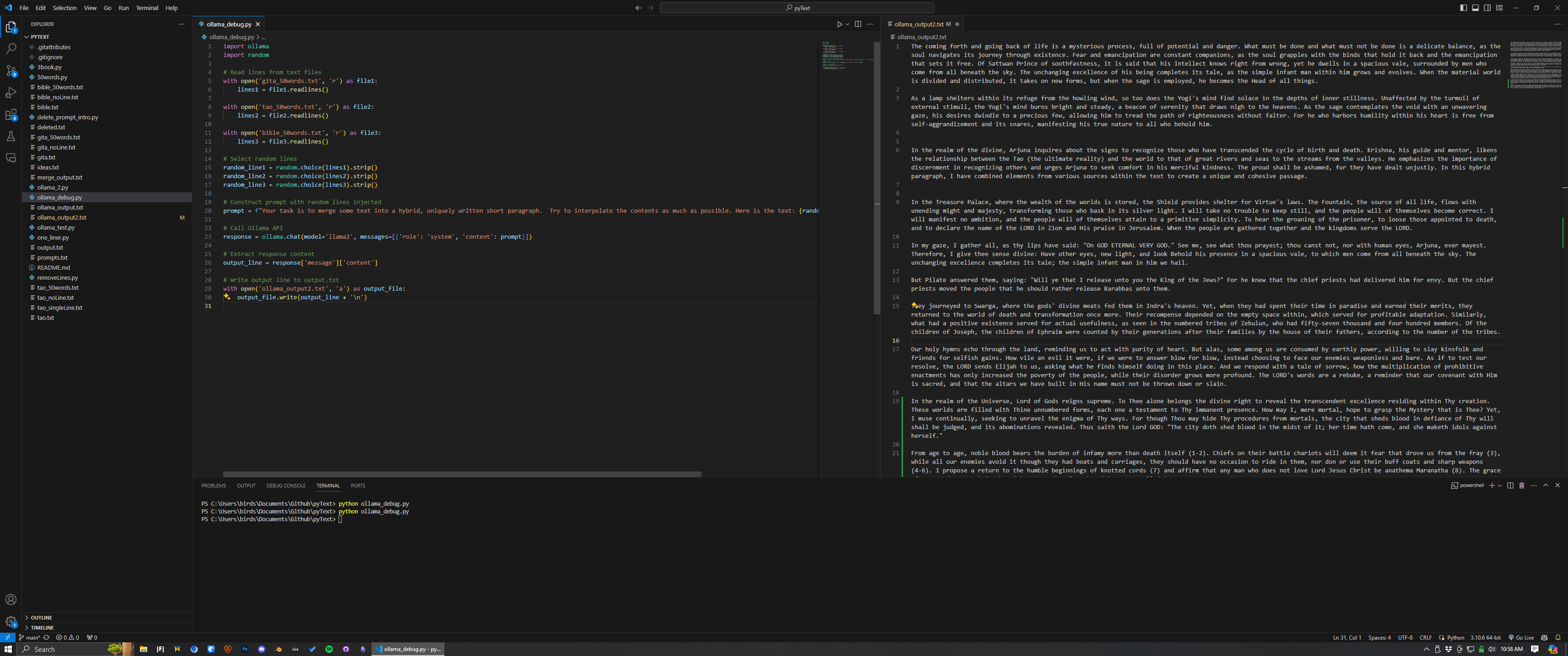

After experimenting with BART and BERT models with partial success, I ended up landing on a Llama 2 solution using ollama, which can be written inline in Python pretty efficiently. This script takes text files and chooses random lines to merge into a new, unique paragraph of text. It does a much better job of forming syntactically sound sentences, but the “chat” component of the model’s construction has some problems to iron out. The main problem is that it has proven hard to prompt engineer out the model’s response bloat - for example, it often outputs the sentence “here is your new unique line of text:” before the desired output. Not a big deal, but annoying once you get millions of lines of text and want to call it from a different script. Otherwise, fantastic progress. I may make this a public repository at some point so that anyone who stumbles upon it can mess around with it too, or contribute to the repo.
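Until the prompt itself fixes the response bloat, one workaround is to clean the output after the fact. This is a minimal sketch, not the script described above: `strip_preamble` and the `PREAMBLE` pattern are hypothetical, and the pattern simply assumes the bloat is a short lead-in ending in a colon. How the response is fetched from ollama is left out.

```python
import re

# Assumed shape of the bloat: a short lead-in that ends in a colon, such as
# "Here is your new unique line of text:". Tune this to what the model emits.
PREAMBLE = re.compile(
    r"^\s*(here is|here's|sure|certainly)\b[^\n:]*:\s*",
    re.IGNORECASE,
)

def strip_preamble(response: str) -> str:
    """Remove one leading chat-style lead-in, then surrounding quotes and whitespace."""
    cleaned = PREAMBLE.sub("", response, count=1)
    return cleaned.strip().strip('"“”').strip()
```

A generated line that legitimately begins with "Here is ... :" would also be trimmed, so it is worth spot-checking a sample before running it over millions of lines.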

February 24, 2024

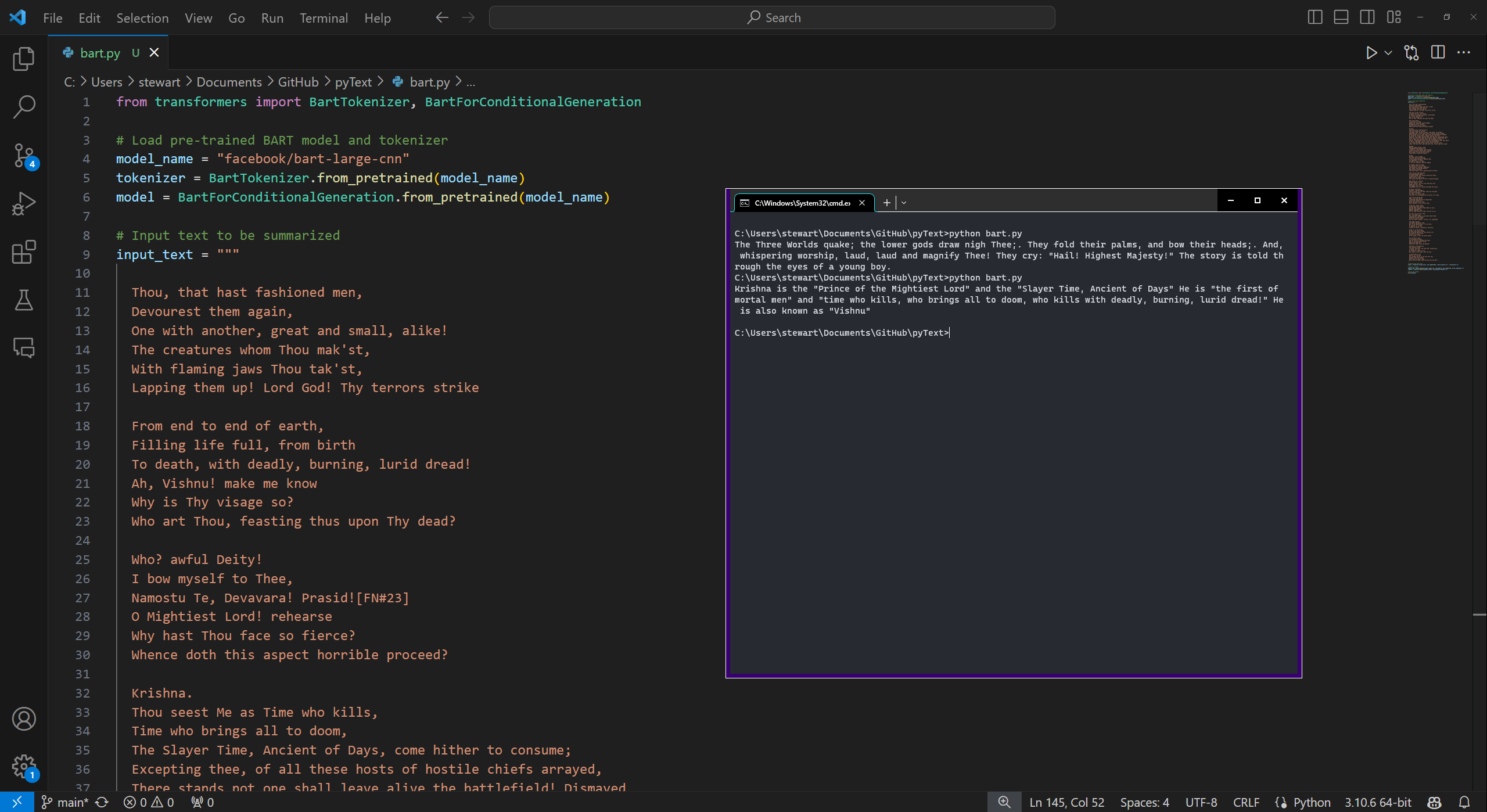

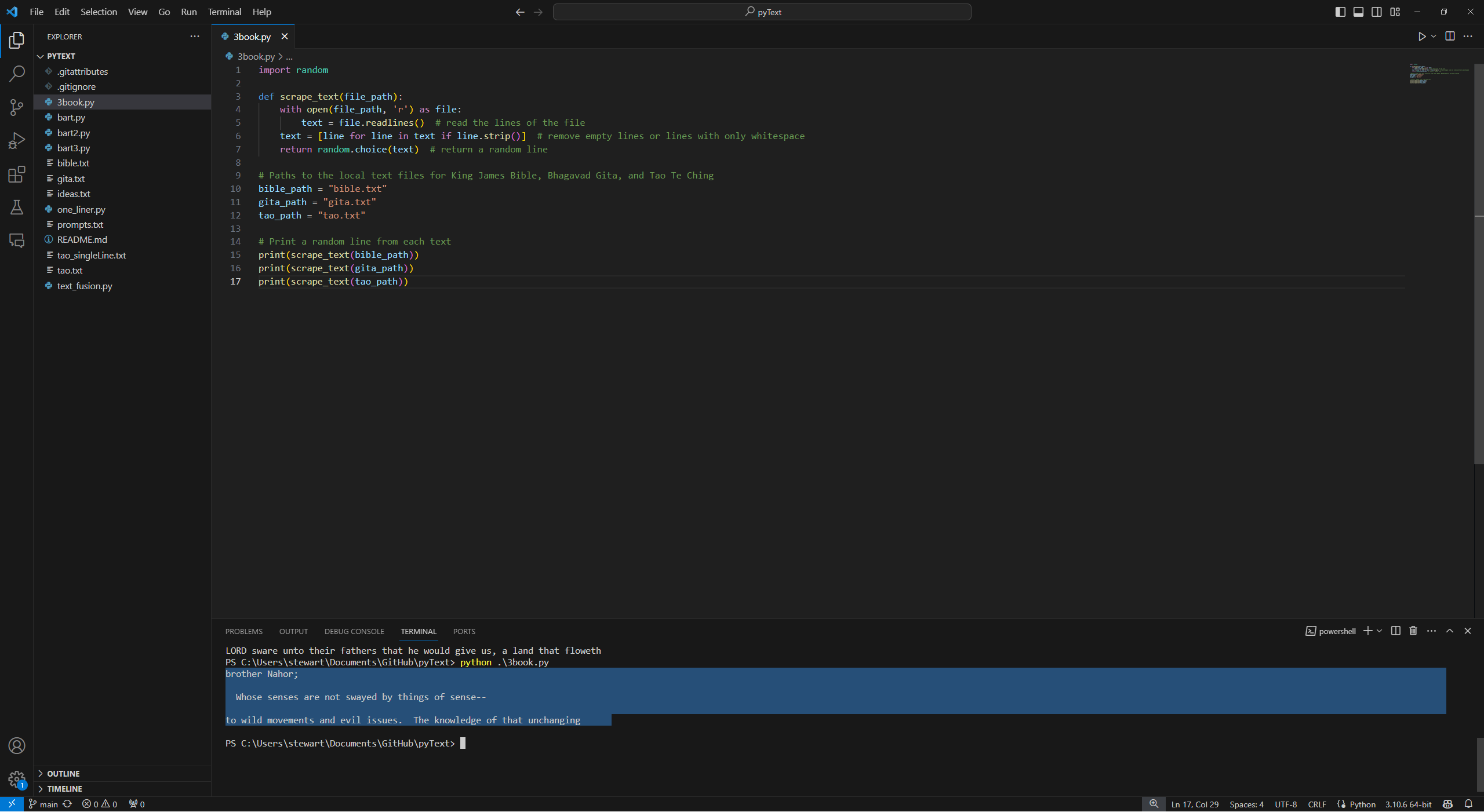

New approach to the text - instead of web scraping, it's become much more interesting to compile lots of .txt files in a repository and play around with ways to synthesize and merge them together. The first test is a script called 3book.py, where plain text versions of the Bible, the Bhagavad Gita, and the Tao Te Ching (from Project Gutenberg) are all placed in a repo. The script reads each .txt, pulls a line at a random index from each file, and prints all 3 in the terminal. Here’s an example, highlighted in the terminal output at the bottom:
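For anyone wanting to try the same thing, here is a minimal sketch of what a script like 3book.py could look like. It is a reconstruction from the description, not the original code; the function names `random_line` and `cut_up` are hypothetical, and skipping blank lines is an assumption the original may not make.

```python
import random
from pathlib import Path

def random_line(path, rng=random):
    """Return one randomly chosen non-empty line from a text file."""
    text = Path(path).read_text(encoding="utf-8")
    # Assumption: blank lines are skipped so every pick contains some text.
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    return rng.choice(lines)

def cut_up(paths, rng=random):
    """Pull one random line from each file, in the order the files are given."""
    return [random_line(p, rng) for p in paths]
```

Calling `cut_up` with the three book paths and printing each returned line gives the three-line terminal output. Passing `random.Random(some_seed)` as `rng` makes a run repeatable.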

brother Nahor;

Whose senses are not swayed by things of sense--

to wild movements and evil issues. The knowledge of that unchanging

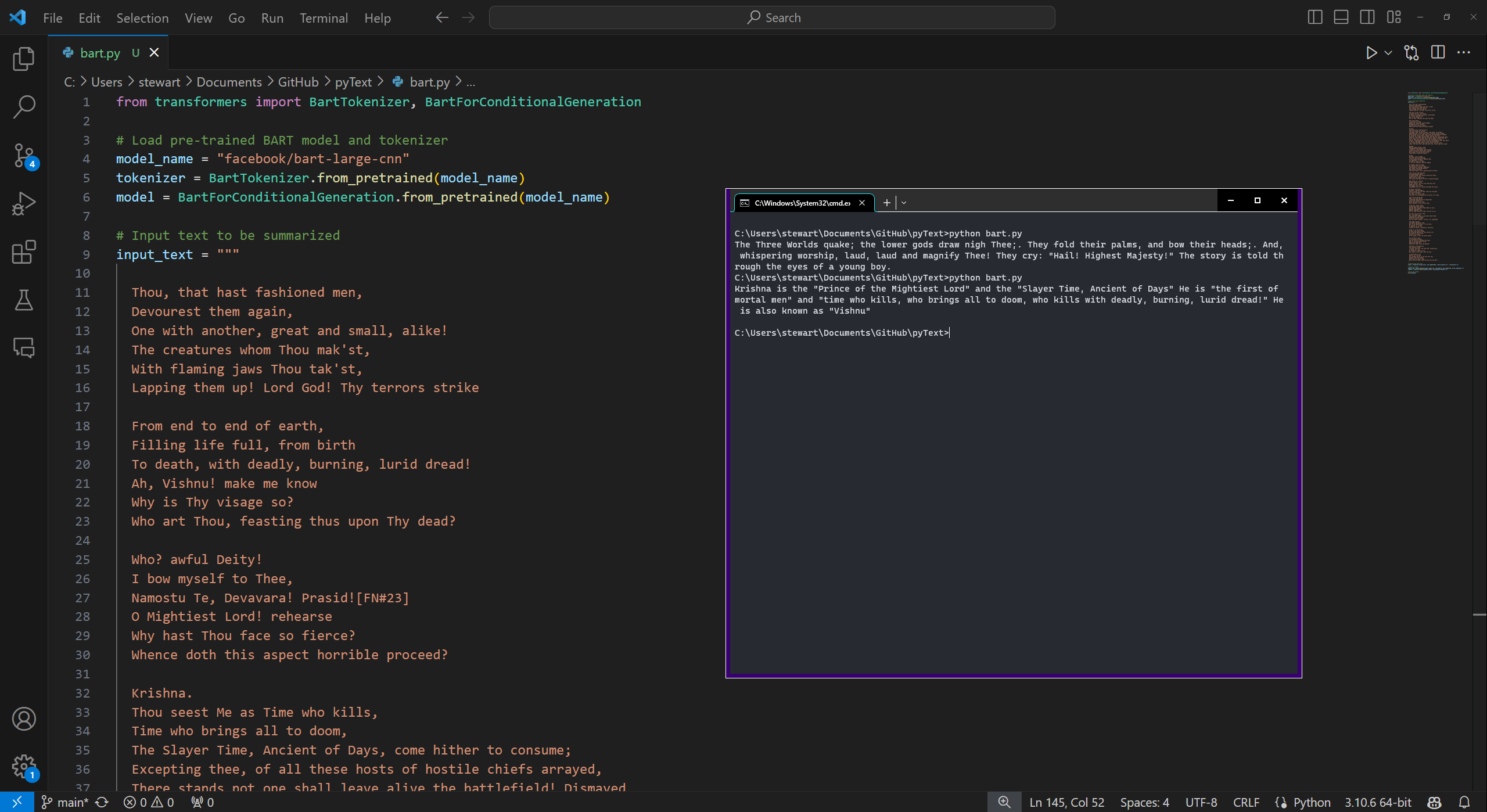

The next challenge is to try out some transformer models for summarization, to synthesize this little cut-up poem into a new, hybrid single sentence. Since we are ultimately feeding this into a prompt window in ComfyUI to modulate an image, it could be further manipulated to extract nouns/verbs from the summarizations, separated by commas (something the AI image gen understands more readily), or any number of creative variations. The next task is to continue compiling bodies of text to synthesize into new significant outputs, and to recursively input those until there is a novel set of what are essentially poems that have been personally curated. It seems right for now to start with sacred texts as the source material for cut-up synthesis, since they are often tasked with describing the beginnings of history, or the utmost core of meaning. To expand outward from that seed feels natural. I am using these 3 for now in the early going, since they provide more than enough natural language to sandbox with. Once the systems make sense, I will focus on compiling larger source texts.
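The comma-separated idea can be roughed out without any model at all. The sketch below is a crude stand-in for real noun/verb extraction (which would need a part-of-speech tagger from an NLP library): it just drops a small, hand-picked stopword list and keeps the remaining words in first-seen order. `to_prompt` and `STOPWORDS` are hypothetical names.

```python
import re

# Tiny hand-picked stopword list; an assumption for this sketch, not a standard list.
STOPWORDS = {
    "a", "an", "and", "are", "by", "for", "in", "is", "not",
    "of", "that", "the", "to", "whose", "with",
}

def to_prompt(lines, stopwords=STOPWORDS):
    """Turn lines of text into a comma-separated keyword prompt, in first-seen order."""
    seen = []
    for line in lines:
        for word in re.findall(r"[A-Za-z]+", line.lower()):
            if word not in stopwords and word not in seen:
                seen.append(word)
    return ", ".join(seen)
```

Run on the three-line cut-up quoted in this entry, it returns `brother, nahor, senses, swayed, things, sense, wild, movements, evil, issues, knowledge, unchanging`.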

February 11, 2024

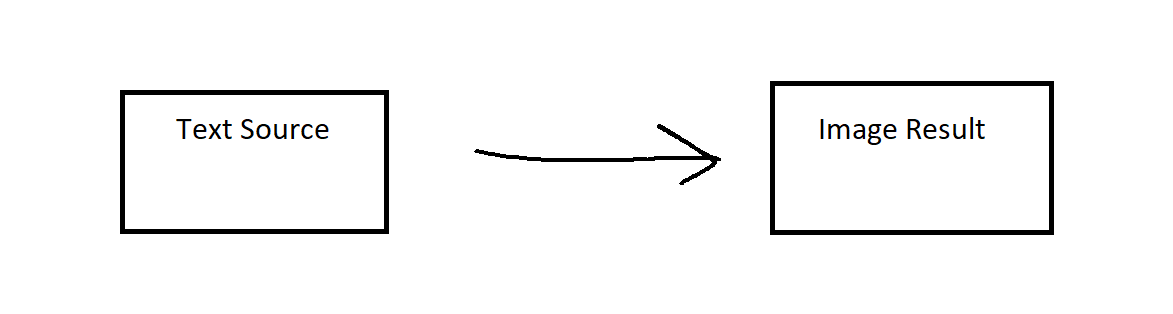

The colloquial definition of generating AI images is text in, image out. Describe what you want to see, and the model produces an attempt at that description.

I don't fundamentally have a problem with this model. I imagine it is useful for more straightforward tasks like producing slide deck templates, boilerplate fantasy avatars for D&D mobs etc. Things where derivation is valued in the system, where the output should match other understood paradigms.

For the purposes of making art, we need to go much further. Text in/image out is not the entire system in and of itself, but more like a module. The inverse is also available: image in/text out. Now we have 2 modules (of many) and, like the fundamental logic gates of computing (AND/OR/XOR/NAND etc.), we can start to chain modules together to produce something intentional.

I should therefore state my intention:

- to imbue/infuse images with a deliberate subject or quality through recursive processing

- to produce a tremendous pool of source material to be funneled into a subtractive collage workflow

To imbue or infuse, and to do so multiple times (recursion), produces a history in an object. A stone picked up from the riverbank is easy to cast into the river; the stone and the caster have little shared history. A stone from my pocket that I have been carrying around since birth may be identical in shape and weight to the stone on the riverbank, but is more difficult to cast.

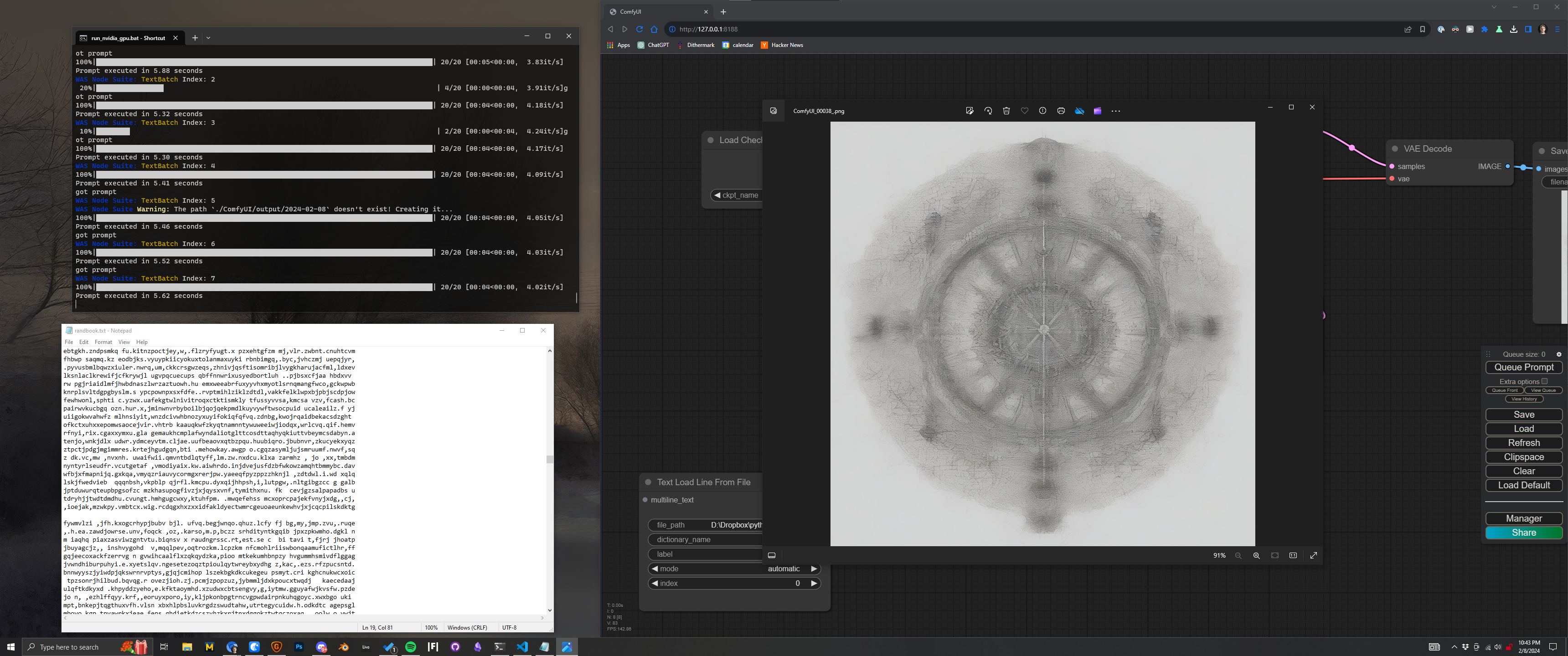

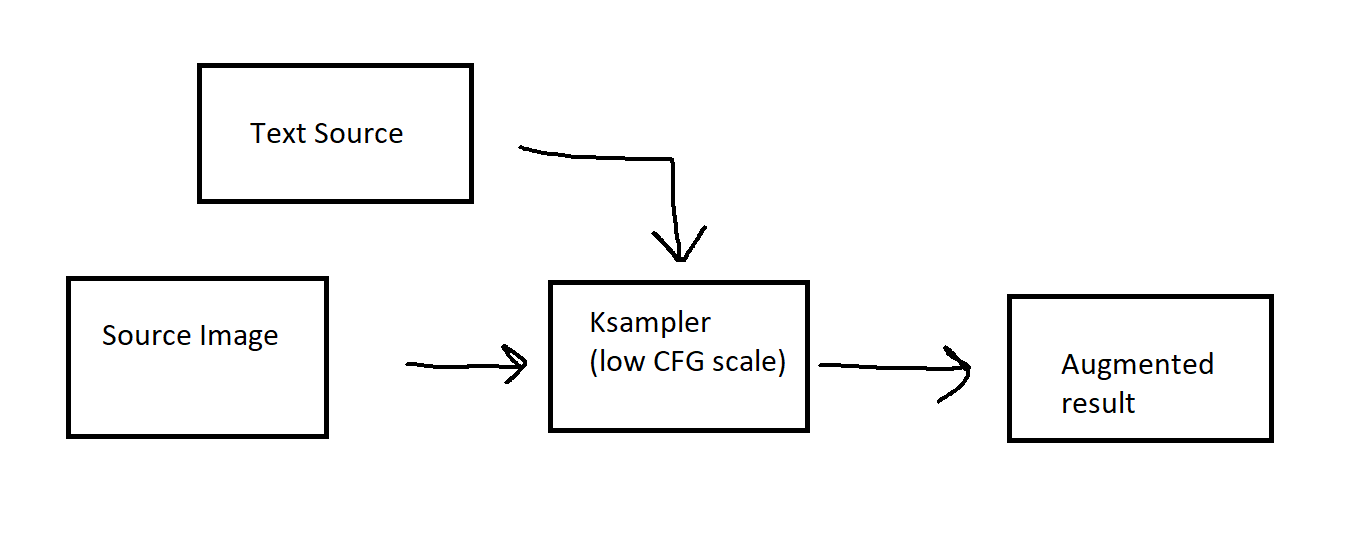

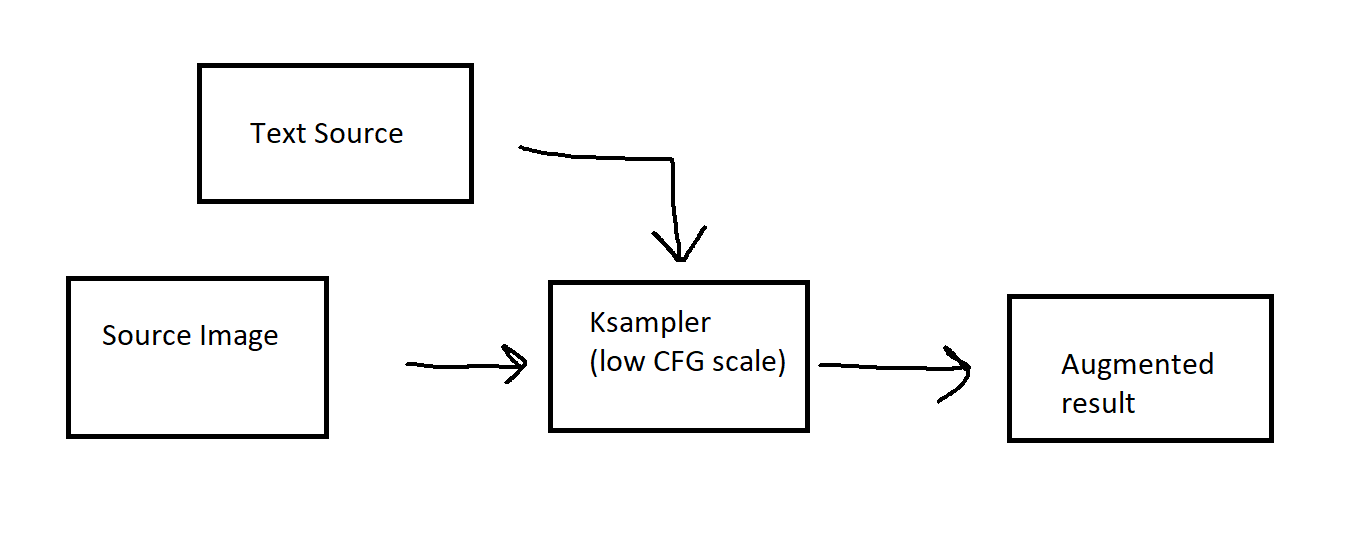

Here is a simple system that is set up well for imbuement:

Here we have 2 inputs (text and image) to produce 1 output (image). The thing in the middle describes the alteration process, and can be translated like so: take this source image, and only change the subject of that image by a small percentage, let's say 10%, based on what the text says. If the source image was a picture of a cat, and the text was "dog", we would augment the image to be 10% more doglike.

This is already significantly more interesting than the basic textIn/imageOut model, for several reasons. Working with a source image allows for tighter preservation of the formal aesthetic quality of the result. The source image also unlocks the recursion loop: the result can be re-entered as the input for another cycle. And most interestingly, the text is released from the burden of being the sole producer of the image result. This unlocks a whole palette of opportunities to use the text imaginatively to disrupt the signal path.
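To get a feel for how small alterations accumulate over cycles, here is a toy numeric analogy. It is emphatically not how a diffusion sampler works: it treats an "image" as a list of numbers and the alteration as a plain linear blend toward a target, purely to show the arithmetic of repeating a 10% step. `imbue` and `recurse` are hypothetical names.

```python
def imbue(source, target, strength=0.1):
    """Move each value of source a fraction `strength` of the way toward target."""
    return [s + strength * (t - s) for s, t in zip(source, target)]

def recurse(source, target, cycles, strength=0.1):
    """Feed the output back in as the next input, `cycles` times."""
    state = list(source)
    for _ in range(cycles):
        state = imbue(state, target, strength)
    return state

# Toy encoding (assumption): 0.0 means "fully cat", 1.0 means "fully dog".
cat, dog = [0.0], [1.0]
one_pass = recurse(cat, dog, cycles=1)      # about 10% of the way to dog
seven_passes = recurse(cat, dog, cycles=7)  # a little over halfway
```

In this toy model the share of the original left after n cycles is 0.9 to the power n, so it takes about seven cycles at 10% before the target outweighs the source. A real sampler behaves nonlinearly, so treat this only as intuition for why many gentle passes differ from one strong pass.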

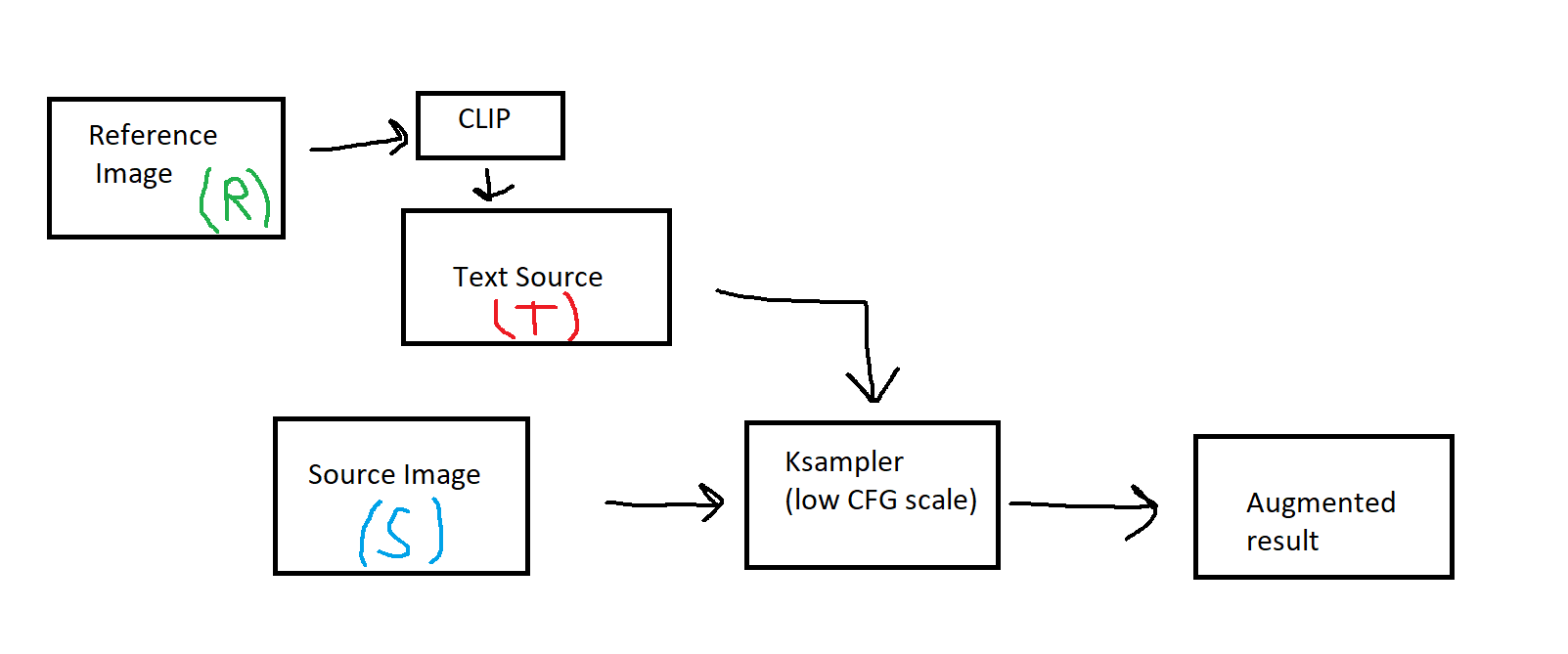

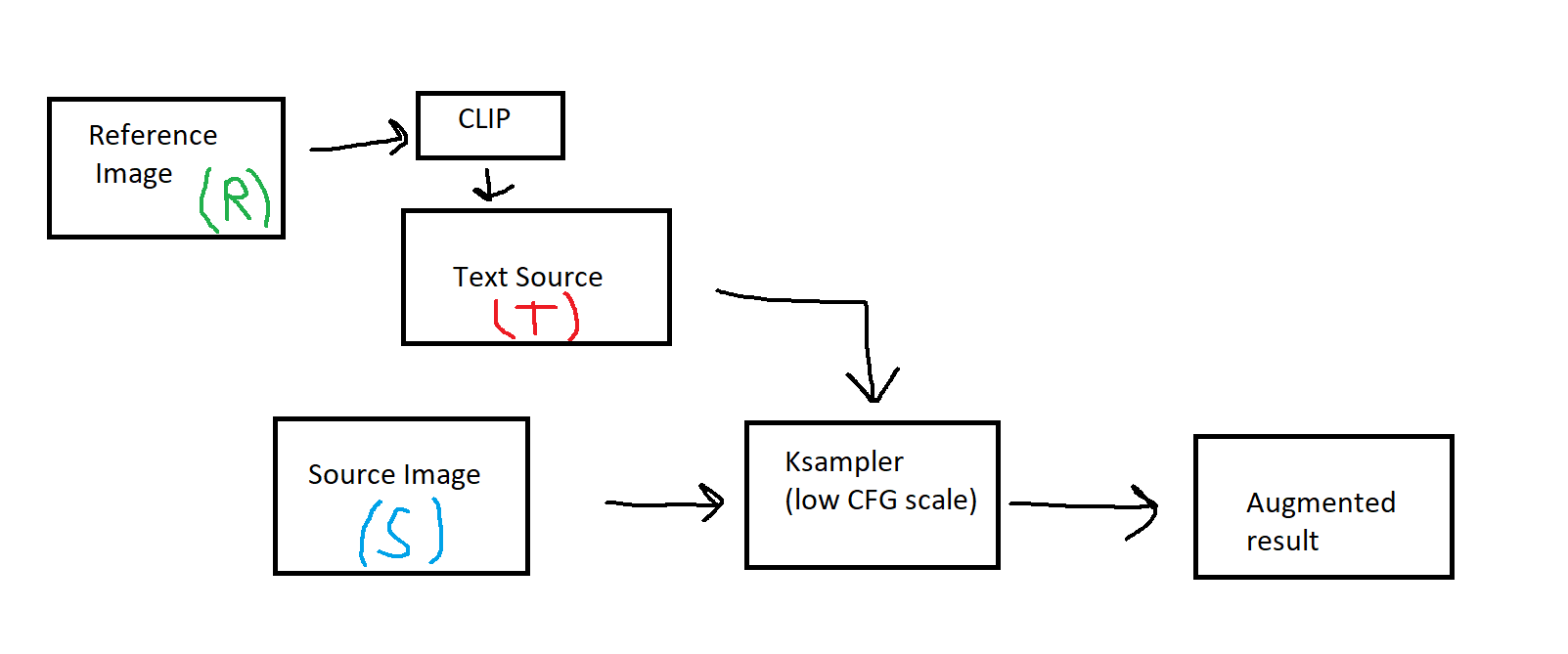

Here's another schematic:

The reference image is being turned into a text description, and that description is what augments the source. It's easy to see how many chains of alterations we can create on the left side of the KSampler before we get to the final output. Every opportunity to create a new pathway in the system is an opportunity to imbue intention into the process.
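The wiring of this second schematic can be sketched as plain function composition. Everything here is a stand-in: an "image" is just a dictionary, `describe` and `alter` are hypothetical placeholders for an image-to-text module and the sampler step, and no real model is called. The point is only the shape of the chain, and that each pass leaves a trace.

```python
def describe(reference):
    """Stand-in for an image-to-text module: return the image's stored subject."""
    return reference["subject"]

def alter(source, text, strength=0.1):
    """Stand-in for the sampler step: record the text as a weighted influence."""
    # A new list is built so the source image itself is left unchanged.
    history = source["history"] + [(text, strength)]
    return {"subject": source["subject"], "history": history}

def second_schematic(source, reference, strength=0.1):
    """Reference image -> text description -> small alteration of the source."""
    return alter(source, describe(reference), strength)

cat = {"subject": "cat", "history": []}
dog = {"subject": "dog", "history": []}
once = second_schematic(cat, dog)    # one pass through the chain
twice = second_schematic(once, dog)  # the output re-entered as the source
```

Swapping `describe` for any other text-producing module (a cut-up script, a summarizer) changes the pathway without touching the rest of the chain.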

All of the effective resulting image outputs are stored in a powerful media organizer. All outputs are subject to becoming inputs. Some will stay dormant for months; others will be cycled more often. New variables will be injected into the system periodically, but at this point the source archive is large enough to cycle over itself and produce infinite new combinations. I spent almost a year only blending images into themselves to produce a large body of synthesized media, without any text prompting. The next phase of the work is to produce more creatively scripted/organized text archives for imbuement. Any number of different text modules can be created and combined in interesting ways.